Many organisations have already adopted AI for translation. They have tested popular tools, integrated APIs, and encouraged teams to “use AI wherever possible.” On paper, the promise is compelling: faster turnaround times, lower costs, and the ability to scale multilingual content without expanding headcount.

In real business environments, the results often fall short of expectations.

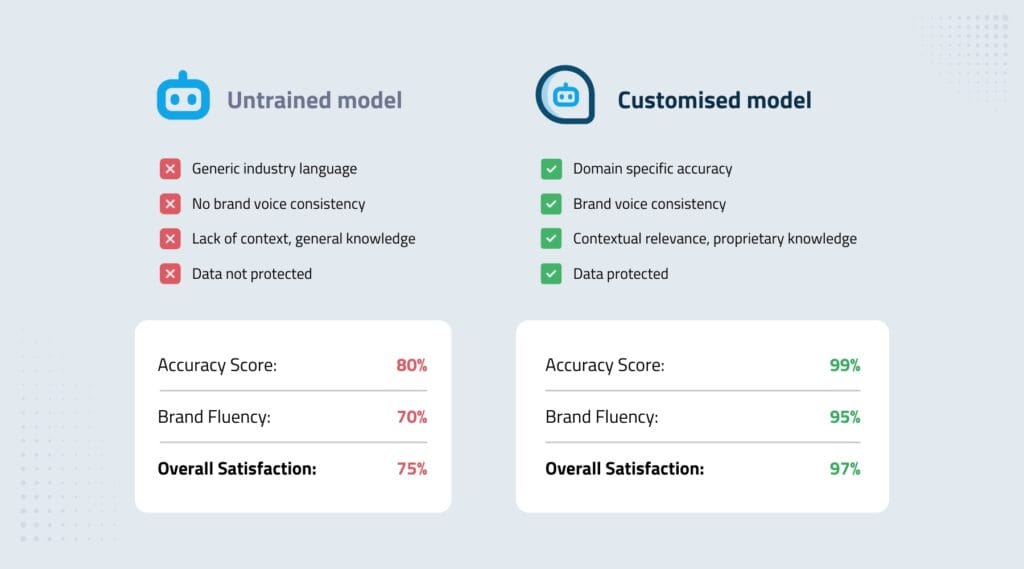

Generic AI models struggle with domain-specific terminology, inconsistent brand voice, and the nuanced language required in regulated or high-stakes content. Outputs may appear fluent at first glance, yet contain subtle inaccuracies, tonal drift, or terminology errors that undermine trust and usability. As translation volumes increase, these issues compound rather than disappear.

This is where AI fine-tuning, delivered through our Custom AI Translation service, becomes essential: models are adapted using client-specific terminology, reference content, and quality standards rather than relying on generic training data alone.

Rather than relying on one-size-fits-all models trained on broad, general-purpose data, fine-tuned translation models are adapted to reflect a company’s industry, terminology, style, and real-world use cases. The objective is not experimentation, but reliable, production-ready translation that fits established workflows and quality expectations.

This article explains what AI fine-tuning for translation actually involves, why it matters for real business use, and how organisations can move beyond generic AI tools towards custom models that deliver consistent results at scale.

What AI fine-tuning for translation actually involves

AI fine-tuning for translation is often misunderstood. It is commonly confused with prompt engineering, glossaries, or post-editing layered on top of generic models. While these approaches can influence outputs, they leave the model’s underlying parameters unchanged, so they do not alter how the model itself handles language.

Fine-tuning does.

In practical terms, fine-tuning adapts a base translation model using curated, domain-relevant data so that preferred terminology, linguistic patterns, and stylistic conventions are learned at the model level. Instead of being repeatedly instructed, the model internalises how content should be translated within a specific business context.

Prompt-based instructions are external and fragile; they depend on consistent user behaviour and tend to degrade as content scales or workflows change. Fine-tuned models behave consistently regardless of who uses them, because the underlying translation behaviour has been adjusted.

For business translation, fine-tuning typically incorporates approved reference content, validated terminology, and real production examples. These inputs shape how the model resolves ambiguity, selects terminology, and maintains tone across languages. The outcome is higher accuracy and predictability, a critical requirement for enterprise translation.
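As a rough illustration of how these inputs might be curated before training, the Python sketch below filters approved translation pairs against a validated glossary and serialises the survivors as JSON lines. It assumes a simple source/target record layout and naive substring matching. The names `SegmentPair`, `respects_glossary`, and `build_training_lines` are hypothetical, as are the sample sentences, and the output format would need to match whatever the chosen training toolchain expects.

```python
import json
from dataclasses import dataclass


@dataclass(frozen=True)
class SegmentPair:
    source: str  # approved source-language segment
    target: str  # human-validated translation of that segment


def respects_glossary(pair, glossary):
    """Return True if every glossary term found in the source
    is rendered with its approved translation in the target.
    Matching is a naive case-insensitive substring check (an assumption)."""
    src = pair.source.lower()
    tgt = pair.target.lower()
    return all(
        approved.lower() in tgt
        for term, approved in glossary.items()
        if term.lower() in src
    )


def build_training_lines(pairs, glossary):
    """Drop duplicates and glossary violations, then emit one JSON object per line."""
    seen = set()
    lines = []
    for pair in pairs:
        if pair in seen or not respects_glossary(pair, glossary):
            continue
        seen.add(pair)
        record = {"source": pair.source, "target": pair.target}
        lines.append(json.dumps(record, ensure_ascii=False))
    return lines


# Hypothetical English-to-German glossary and reference content.
glossary = {"invoice": "Rechnung"}
pairs = [
    SegmentPair("Please pay the invoice.", "Bitte bezahlen Sie die Rechnung."),
    SegmentPair("Your invoice is attached.", "Ihre Faktura ist beigefügt."),    # off-glossary term
    SegmentPair("Please pay the invoice.", "Bitte bezahlen Sie die Rechnung."),  # duplicate
    SegmentPair("Thank you.", "Vielen Dank."),
]

lines = build_training_lines(pairs, glossary)
for line in lines:
    print(line)
```

In this sketch the off-glossary pair and the duplicate are excluded, so only vetted examples reach the training set. In practice, linguists would decide which pairs count as approved and how strictly terminology is matched.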

Importantly, fine-tuning does not replace human expertise. It depends on it. Decisions about data selection, terminology handling, and acceptable quality are human-led. Fine-tuning ensures these decisions are reflected consistently in the model’s output, reducing repetitive correction and manual rework.

When fine-tuning becomes necessary

Not every translation task requires a fine-tuned model. For ad hoc or low-risk content, generic AI tools may be sufficient. The need for fine-tuning emerges when translation becomes operational rather than experimental.

This typically occurs when organisations produce high volumes of multilingual content, operate across regulated environments, or rely on precise terminology to maintain clarity and trust. In these scenarios, small inconsistencies are no longer isolated issues; they accumulate and create risk, inefficiency, and review bottlenecks.

Fine-tuning becomes particularly valuable when translation outputs are reused across channels, reviewed by multiple stakeholders, or embedded into downstream systems. Teams need confidence in how the model will behave before content reaches production.

By embedding language preferences and quality standards directly into the model, fine-tuning reduces variability and simplifies governance. Review cycles shorten, quality becomes easier to manage, and AI-assisted translation becomes more dependable.
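One way to make this kind of governance measurable is a terminology adherence check run on model output before release. The Python sketch below is a minimal example under stated assumptions: the function `term_adherence`, the sample sentences, and the 0.95 release threshold are all hypothetical, and matching is a simple case-insensitive substring test rather than proper linguistic analysis.

```python
def term_adherence(outputs, glossary):
    """outputs: list of (source, machine_translation) tuples.
    Returns (rate, flagged), where rate is the share of glossary-relevant
    segments that use every approved term, and flagged lists the rest."""
    checked = 0
    flagged = []
    for source, translation in outputs:
        expected = [
            approved
            for term, approved in glossary.items()
            if term.lower() in source.lower()
        ]
        if not expected:
            continue  # no glossary term in this segment, nothing to check
        checked += 1
        if not all(a.lower() in translation.lower() for a in expected):
            flagged.append((source, translation))
    rate = 1.0 if checked == 0 else (checked - len(flagged)) / checked
    return rate, flagged


# Hypothetical glossary and model outputs (English to German).
glossary = {"warranty": "Garantie", "invoice": "Rechnung"}
outputs = [
    ("The warranty covers two years.", "Die Garantie gilt zwei Jahre."),
    ("Send the invoice today.", "Senden Sie die Rechnung heute."),
    ("The warranty has expired.", "Die Gewährleistung ist abgelaufen."),
    ("Good morning.", "Guten Morgen."),
]

THRESHOLD = 0.95  # assumed release threshold, set by the quality owner

rate, flagged = term_adherence(outputs, glossary)
release_ok = rate >= THRESHOLD
print(f"adherence={rate:.2f}, release_ok={release_ok}, to_review={len(flagged)}")
```

Only the flagged segments would be routed to reviewers, so a check of this kind supports the shift from correcting everything to overseeing exceptions.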

Moving from experimentation to production-ready translation

AI fine-tuning for translation marks the point where AI stops being an experiment and becomes part of a dependable production workflow. For organisations operating across languages, markets, and regulatory environments, consistency matters as much as speed.

Generic AI tools are designed to perform broadly, not precisely. As translation volumes grow and content becomes more specialised, their limitations become harder to manage. Fine-tuning addresses this by embedding linguistic expectations directly into the model, reducing variability and making output behaviour easier to govern.

The real value of fine-tuning is predictability. Teams can rely on stable terminology, consistent tone, and repeatable quality across content types and markets. Review effort shifts from constant correction to focused oversight, allowing AI to scale execution while human expertise maintains control.

For organisations ready to operationalise AI translation with confidence, fine-tuning provides the foundation needed to move forward without compromising clarity, consistency, or accountability.