While many enterprises are still exploring how best to apply AI, some organisations are already demonstrating what it looks like to deploy the technology at scale, and profitably. In this blog post we share what we have learned from the multinational companies we have worked with, and continue to work with, on executing their AI projects.

The secret: Platform-first, open architecture

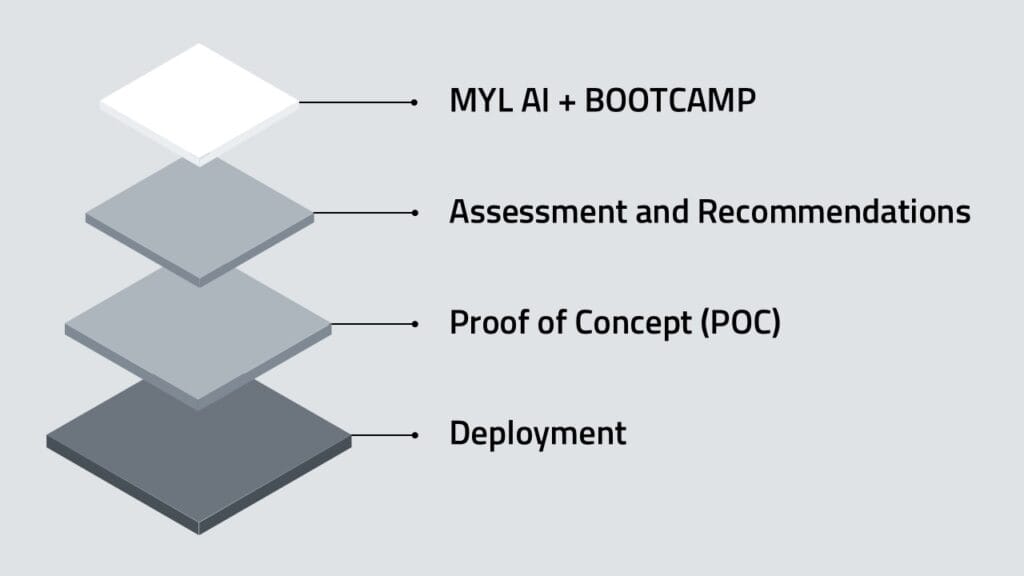

We believe success depends on a platform-first strategy built on open architecture, which allows AI to be embedded and scaled across the organisation. Rather than treating AI as a one-off tool, companies build it into the core of their technology stack. We call this a “Horizontal Platform” because it spans the entire company.

Central to this approach is a self-service data platform that acts as a single source of truth for governance, discoverability, quality, and security. This horizontal platform empowers internal teams with quick, trusted access to data, which is essential for accelerating AI development.
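To make the idea of a single source of truth more concrete, here is a minimal Python sketch of a catalogue that records ownership, a quality score and a security classification for each dataset, and answers both discovery and access questions from that one record. The class names, the three-level classification scheme and the quality scores are hypothetical; a real platform would typically sit on a dedicated data-catalogue product rather than in-memory objects.

```python
from dataclasses import dataclass, field


@dataclass
class Dataset:
    name: str
    owner: str
    description: str
    classification: str          # hypothetical levels: "public", "internal", "confidential"
    quality_score: float         # 0.0-1.0, assumed to come from automated quality checks
    tags: set[str] = field(default_factory=set)


# Hypothetical clearance ordering, lowest to highest.
CLEARANCE = {"public": 0, "internal": 1, "confidential": 2}


class DataCatalogue:
    """One registry that answers governance, discovery, quality and security questions."""

    def __init__(self) -> None:
        self._datasets: dict[str, Dataset] = {}

    def register(self, ds: Dataset) -> None:
        # A dataset has exactly one catalogue entry, so duplicates are rejected.
        if ds.name in self._datasets:
            raise ValueError(f"dataset {ds.name!r} already registered")
        self._datasets[ds.name] = ds

    def search(self, tag: str, min_quality: float = 0.0) -> list[str]:
        # Discovery: find datasets by tag that meet a minimum quality bar.
        return sorted(
            d.name for d in self._datasets.values()
            if tag in d.tags and d.quality_score >= min_quality
        )

    def can_access(self, name: str, user_clearance: str) -> bool:
        # Security: a user may read a dataset at or below their clearance level.
        ds = self._datasets[name]
        return CLEARANCE[user_clearance] >= CLEARANCE[ds.classification]


cat = DataCatalogue()
cat.register(Dataset("crm_contacts", "sales-data", "Customer contacts",
                     "confidential", 0.92, {"customer"}))
cat.register(Dataset("web_traffic", "marketing-data", "Daily site visits",
                     "internal", 0.75, {"customer", "marketing"}))
print(cat.search("customer", min_quality=0.8))   # ['crm_contacts']
print(cat.can_access("crm_contacts", "internal"))  # False
```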

Complementing this is a dedicated AI Protocol Platform, which serves as both a knowledge library and a tracking system. It ensures that teams across functions can build and deploy AI models efficiently and in compliance with governance standards, while also learning from prior use cases.
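One way to picture the tracking side of such a platform is a registry in which every use case moves through governance stages and accumulates notes that later teams can search. The sketch below assumes a hypothetical four-stage lifecycle and a naive keyword search; the names (`ProtocolRegistry`, `Status`, the example use case and note) are illustrative only and do not describe a specific product.

```python
from dataclasses import dataclass, field
from enum import Enum


class Status(Enum):
    PROPOSED = "proposed"
    APPROVED = "approved"
    DEPLOYED = "deployed"
    RETIRED = "retired"


# Hypothetical governance policy: the only lifecycle moves allowed.
TRANSITIONS = {
    Status.PROPOSED: {Status.APPROVED, Status.RETIRED},
    Status.APPROVED: {Status.DEPLOYED, Status.RETIRED},
    Status.DEPLOYED: {Status.RETIRED},
    Status.RETIRED: set(),
}


@dataclass
class UseCase:
    name: str
    team: str
    model: str
    status: Status = Status.PROPOSED
    lessons: list[str] = field(default_factory=list)


class ProtocolRegistry:
    """Tracks every AI use case and what was learned from it."""

    def __init__(self) -> None:
        self._cases: dict[str, UseCase] = {}

    def propose(self, name: str, team: str, model: str) -> UseCase:
        case = UseCase(name, team, model)
        self._cases[name] = case
        return case

    def advance(self, name: str, new_status: Status) -> None:
        # Reject transitions that skip a governance step (e.g. proposed -> deployed).
        case = self._cases[name]
        if new_status not in TRANSITIONS[case.status]:
            raise ValueError(f"{case.status.value} -> {new_status.value} not allowed")
        case.status = new_status

    def add_lesson(self, name: str, lesson: str) -> None:
        self._cases[name].lessons.append(lesson)

    def find_prior(self, keyword: str) -> list[str]:
        # Naive keyword search over names and notes so new teams can reuse past work.
        kw = keyword.lower()
        return sorted(
            c.name for c in self._cases.values()
            if kw in c.name.lower() or any(kw in note.lower() for note in c.lessons)
        )


reg = ProtocolRegistry()
reg.propose("board-summaries", team="legal", model="model-x")
reg.advance("board-summaries", Status.APPROVED)
reg.add_lesson("board-summaries", "Example note: specify the output template up front")
print(reg.find_prior("template"))  # ['board-summaries']
```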

Unified AI ecosystem

Once the overarching platform is in place, AI capabilities can grow rapidly. This setup allows companies to:

- Choose selectively which applications to build for specific vertical sectors of the business

- Scale AI and IT workloads quickly

- Optimise costs by using cloud services where appropriate

- Maintain high availability and resilience

A major design philosophy behind this architecture is vendor agnosticism. By avoiding lock-in to a single provider, the organisation can choose the most effective large language model (LLM) or AI service for each use case. It also allows for real-time monitoring of model performance and dynamic cost optimisation by switching models as more efficient options become available.
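A minimal sketch of what vendor agnosticism can look like in code: every model sits behind the same `Callable[[str], str]` interface, monitoring feeds evaluation scores into a moving average, and each request goes to the cheapest model that currently clears a quality bar, falling back to the next one if a provider fails. The vendor names, prices, scores and the 0.7 threshold are invented for illustration, and the lambdas stand in for real SDK calls.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class ModelOption:
    name: str
    call: Callable[[str], str]   # thin wrapper around whichever vendor SDK is used
    cost_per_1k_tokens: float    # assumed to come from the vendor's price list
    quality: float = 0.0         # rolling evaluation score in [0, 1]


class ModelRouter:
    """Routes each request to the cheapest model that currently meets a quality bar."""

    def __init__(self, min_quality: float) -> None:
        self.min_quality = min_quality
        self.options: list[ModelOption] = []

    def register(self, option: ModelOption) -> None:
        # Adding a new vendor or model needs no change to calling code.
        self.options.append(option)

    def record_score(self, name: str, score: float, alpha: float = 0.2) -> None:
        # Fold a new evaluation result into an exponential moving average.
        for opt in self.options:
            if opt.name == name:
                opt.quality = (1 - alpha) * opt.quality + alpha * score
                return
        raise KeyError(name)

    def ranked(self) -> list[ModelOption]:
        eligible = [o for o in self.options if o.quality >= self.min_quality]
        return sorted(eligible, key=lambda o: o.cost_per_1k_tokens)

    def complete(self, prompt: str) -> tuple[str, str]:
        # Try eligible models cheapest-first; fall back on any provider error.
        errors = []
        for opt in self.ranked():
            try:
                return opt.name, opt.call(prompt)
            except Exception as exc:
                errors.append(f"{opt.name}: {exc}")
        raise RuntimeError("no eligible model succeeded: " + "; ".join(errors))


def unavailable(prompt: str) -> str:
    raise TimeoutError("vendor timeout")  # simulates a provider outage


router = ModelRouter(min_quality=0.7)
router.register(ModelOption("vendor-a-small", unavailable, 0.5, quality=0.8))
router.register(ModelOption("vendor-b-large", lambda p: p.upper(), 3.0, quality=0.9))
router.register(ModelOption("vendor-c-tiny", lambda p: p, 0.1, quality=0.5))
print(router.complete("hello"))  # ('vendor-b-large', 'HELLO')
```

Because routing is driven by monitored scores and prices rather than hard-coded vendors, a cheaper model whose evaluation scores rise above the threshold starts receiving traffic automatically, which is the dynamic cost optimisation described above.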

What horizontal and vertical use cases look like

We recommend that companies structure their AI initiatives into two distinct categories:

- Horizontal use cases (tools accessible to all employees).

- Vertical use cases (targeting specific roles like marketing teams, HR teams, customer service agents, and relationship managers).

Horizontal use case example

MYL built a large language model, similar to ChatGPT but customised, managed securely in-house and available to the entire workforce, that helps enforce the company’s brand guidelines on any piece of content it produces.
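The post does not describe how MYL’s model enforces brand guidelines. One plausible pattern, sketched here under that assumption, is to turn the guidelines into explicit rules that both generate the model’s system prompt and check its output before anything is published. The rules shown (preferred terms, banned phrases, a sentence-length limit) are hypothetical placeholders, and the LLM call itself is omitted.

```python
import re
from dataclasses import dataclass, field


@dataclass
class BrandGuide:
    # All rules here are hypothetical placeholders, not real brand guidelines.
    preferred_terms: dict[str, str] = field(default_factory=dict)  # avoid -> use
    banned_phrases: list[str] = field(default_factory=list)
    max_sentence_words: int = 30

    def system_prompt(self) -> str:
        # Turn the rules into instructions for the in-house model.
        lines = ["Rewrite the user's text to follow these brand rules:"]
        for avoid, use in self.preferred_terms.items():
            lines.append(f'- Say "{use}" instead of "{avoid}".')
        for phrase in self.banned_phrases:
            lines.append(f'- Never use the phrase "{phrase}".')
        lines.append(f"- Keep sentences to at most {self.max_sentence_words} words.")
        return "\n".join(lines)

    def violations(self, text: str) -> list[str]:
        # Check generated (or human) content against the same rules.
        found = []
        lower = text.lower()
        for avoid, use in self.preferred_terms.items():
            if re.search(rf"\b{re.escape(avoid.lower())}\b", lower):
                found.append(f'use "{use}" instead of "{avoid}"')
        for phrase in self.banned_phrases:
            if phrase.lower() in lower:
                found.append(f'banned phrase "{phrase}"')
        for sentence in re.split(r"(?<=[.!?])\s+", text.strip()):
            if len(sentence.split()) > self.max_sentence_words:
                found.append(f"sentence too long: {sentence[:40]}...")
        return found


guide = BrandGuide({"client": "customer"}, ["world-class"], max_sentence_words=12)
draft = "Our world-class team helps every client succeed."
print(guide.violations(draft))
# ['use "customer" instead of "client"', 'banned phrase "world-class"']
```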

Vertical use case example

Using the horizontal architecture, we developed a vertical AI application for the in-house legal team that prepares summaries of board meetings in a specific format, considerably reducing management time and speeding up their dissemination.
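A fixed format is most useful when it can be checked automatically. A minimal sketch, assuming a hypothetical four-heading template: the same list of headings drives the prompt sent to the model and a validator that flags missing or out-of-order sections before a summary is circulated. The section names are illustrative, not the legal team’s actual format, and the model call is omitted.

```python
# Hypothetical template; a real legal team would define its own sections.
REQUIRED_SECTIONS = ["Attendees", "Decisions", "Action Items", "Next Meeting"]


def build_prompt(transcript: str) -> str:
    # The template is part of the prompt, so the model is told the exact structure.
    headings = "\n".join(f"## {s}" for s in REQUIRED_SECTIONS)
    return (
        "Summarise the board meeting below using exactly these headings, in this order:\n"
        f"{headings}\n\nMeeting notes:\n{transcript}"
    )


def missing_or_misordered(summary: str) -> list[str]:
    # Validate the model's output against the same template before it is shared.
    found = [line[3:].strip() for line in summary.splitlines() if line.startswith("## ")]
    problems = [s for s in REQUIRED_SECTIONS if s not in found]
    present = [s for s in found if s in REQUIRED_SECTIONS]
    expected_order = [s for s in REQUIRED_SECTIONS if s in present]
    if present != expected_order:
        problems.append("sections out of order")
    return problems


summary = "## Attendees\n- Chair\n## Decisions\n- Item 1\n## Action Items\n- Item 2"
print(missing_or_misordered(summary))  # ['Next Meeting']
```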

AI is a process, not a plug-in

One of the biggest misconceptions in enterprise AI is that success comes from simply adding LLMs to existing systems. In reality, effective integration requires process re-engineering. Before implementing any AI model, the organisation must first define the business outcomes, then adjust workflows to embed AI meaningfully and sustainably. This change management approach brings employees into the transformation journey early, building trust and buy-in from the ground up.

Key takeaways

The path to AI maturity is complex, but the roadmap is becoming clearer. It involves:

- Tech teams working in partnership with end-user departments.

- A strong data and model governance framework.

- A flexible, hybrid IT architecture.

- Developing effective vertical AI applications that solve real-world business problems.

- Continuous evaluation and optimisation of AI performance and cost.

Organisations that approach AI not as a one-time tool but as an integrated, evolving platform will be best positioned to unlock real, repeatable business value.